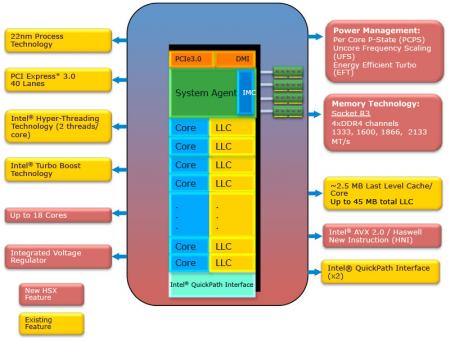

Last year, Intel introduced its next-generation microarchitecture, codenamed Haswell. While the first rollout of this microarchitecture focused on desktop and notebook platforms, Intel has recently released Haswell CPUs designed specifically for the dual-processor server market. This new roll-out of Haswell-based CPUs, the Intel Xeon E5-2600 V3 processors, illustrates Intel’s commitment to pushing performance standards higher and, in turn, to raising the level at which its OEM partners must perform (Figure 1).

Of course, marching in lock-step with Intel inevitably pays dividends for its partners as well as for the customer base. With many new product launches, it is safe to assume that performance will increase handily while pricing becomes more favorable for consumers, offering better performance at the same price point as their previous upgrades.

Overview of Intel Xeon Processor E5-2600 v3 product family (Haswell). Courtesy Intel.

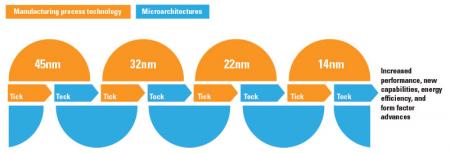

Key to this formula is Intel’s unique “Tick-Tock” model, which delivers continued innovations in manufacturing process technology and processor microarchitecture in alternating “tick” and “tock” cycles. A “tick” cycle advances manufacturing process technologies to enable new capabilities such as higher performance and greater energy efficiency within a smaller, more capable version of the previous “tock” microarchitecture. The alternating “tock” cycle uses the previous “tick” cycle’s manufacturing process technologies to introduce new innovations in processor microarchitecture to improve energy efficiency and performance, as well as functionality and density of features (Figure 2).

Intel’s “tick-tock” model alternates between improvements to manufacturing/process technology and enhancements in processor microarchitecture. Courtesy Intel.

While this provides a roadmap for increased performance, it also helps Intel partners maintain some predictability in their own product cycles as they strive to embed technologies that match the performance standards of Intel’s latest product launch.

More Performance and Better Value

So, what does this mean for customers and the OEMs that support them? To better understand, let’s consider the latest Intel microarchitecture release, codenamed Haswell. The E5-2600 V3 processors featured in this release offer up to 18 physical cores per CPU. Along with more cores come faster memory and a faster interconnect between the CPUs in the system. This provides substantially more compute power, which will make 40 GbE a practical reality in standard server platforms. This is a tremendous benefit given trends like the “Internet of Things” that continually increase network traffic. With more traffic to process, 40 GbE makes it possible to add capability to existing systems, rather than adding more servers, to handle the increase.

E5-2600 V3-based servers also bring a needed performance boost to non-volatile storage with native support for DDR4 NVDIMMs. DDR4 NVDIMMs provide an industry-standard way of storing data in high-speed RAM without the risk of losing it in the event of a power or node failure. In addition to DDR4 NVDIMM support in new E5-2600 V3-based dual-processor server platforms, SSD performance is increasing with the addition of NVMe support, which gives SSD storage direct, multi-lane PCIe access. This lowers latency and provides more bandwidth to a solid-state drive than SATA or SAS would, in effect creating multiple tiers of high-performance storage that can be matched to what each system actually requires. Unlike some previous generations of PCIe SSD storage, NVMe SSDs are offered in hot-swap 2.5” hard drive form factors as well as traditional PCIe add-in cards (AICs). With the 2.5” form factor, OEMs can take advantage of the newer, high-performance SSD architecture while maintaining the serviceability and drive density typically associated with SAS and SATA drives.

For customers seeking higher storage performance, the cost per gigabyte typically rises. A logical way to manage these costs is a tiered storage model that keeps the most frequently accessed data on the fastest, most expensive tier and moves less performance-sensitive data to lower tiers, which offer reduced performance at a lower cost per gigabyte. While the value improves with each subsequent release, so does the process by which those upgrades are delivered. By providing customers with the next generation of Intel processors and ensuring that the corresponding system upgrades incorporate the latest microarchitecture, Intel delivers a balanced platform that prevents choke points from developing.
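The tiered model described above can be sketched as a simple greedy placement: rank datasets by how often they are accessed, then fill the fastest, most expensive tier first. This is a minimal illustration under stated assumptions; the `Tier` type, the `place_by_access` and `total_cost` helpers, and every capacity, cost, and access count are hypothetical and do not come from any vendor tool. Real tiering software also migrates data as access patterns change over time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    capacity_gb: float  # hypothetical usable capacity of this tier
    cost_per_gb: float  # hypothetical relative cost per gigabyte


def place_by_access(datasets, tiers):
    """Greedy placement: the hottest datasets claim the fastest tier first.

    datasets: list of (name, size_gb, accesses_per_day) tuples (hypothetical)
    tiers: list of Tier, ordered fastest / most expensive first
    Returns a dict mapping dataset name -> tier name.
    """
    remaining = {t.name: t.capacity_gb for t in tiers}
    placement = {}
    # Sort by access frequency, most frequently accessed first.
    for name, size_gb, _ in sorted(datasets, key=lambda d: d[2], reverse=True):
        # Walk down the tiers until one has enough free capacity.
        for tier in tiers:
            if remaining[tier.name] >= size_gb:
                remaining[tier.name] -= size_gb
                placement[name] = tier.name
                break
        else:
            raise ValueError(f"no tier has room for {name!r}")
    return placement


def total_cost(datasets, tiers, placement):
    """Sum size * cost_per_gb for each dataset on its assigned tier."""
    cost = {t.name: t.cost_per_gb for t in tiers}
    return sum(size_gb * cost[placement[name]] for name, size_gb, _ in datasets)
```

For example, with a small hypothetical NVDIMM tier, a larger NVMe SSD tier, and a bulk SAS HDD tier, a frequently read index lands on NVDIMM while rarely read logs fall through to the HDD tier, keeping the expensive capacity reserved for the data that benefits from it.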

Creating New Levels of Efficiency

As new technologies emerge alongside the new microarchitecture, design partners like Unicom Engineering can plan each customer’s next transition: either increased performance at nearly the same price, or substantially increased performance over the previous generation of products to keep the customer’s solution on the cutting edge. The corollary is that if customers do not require increased performance, they can scale down to a lower-tier system and potentially pay less for the performance level they are accustomed to.

Three rapidly growing markets (storage, information security, and communications) each require more capacity, and all expect to acquire it at a decreasing cost per session, per gigabyte of storage, and per gigabit of throughput. Having more cores and bandwidth available means each node has more aggregate throughput, allowing a storage appliance, for example, to handle more directly connected bulk storage. More CPU cores, more network bandwidth, and more memory bandwidth mean customers can process a higher volume of encryption or security transactions. Simply put, each system can perform more work or handle more bandwidth.

Looking specifically at communications infrastructure, Intel’s customers can perform more transcoding, more encryption, and more authentications in a single box with the new Haswell microarchitecture than ever before. They can handle more subscribers, or the same number of subscribers at a higher workload than was possible on the previous platform. For roughly the same power, price, and physical footprint, they can handle more transactions.

Intel’s Commitment to Partners Runs Deep

Not only can customers opt for greater power and performance, they can also match price points to specific performance needs. For example, if a customer is seeking a brand-new architecture, an OEM can recommend holding off for the appropriate number of months until Intel’s latest and greatest is available. As the actual product release draws near, Intel feeds more details into the marketplace: 18-24 months out, the projected cut-in date for the new technology is known; one year out, the timing becomes clearer; six months out usually brings final feature decisions; and three months before launch, everything is locked down. Thirty days before launch, all pre-production equipment has been evaluated. Because Intel maintains this consistency in upgrade deployments, its OEM partners can better advise customers on which technology investments to make and when.

Intel’s Embedded Alliance is another program the company offers OEM partners to maximize the potential of new upgrades and releases. The alliance gives partners access to all of the products on the Intel Embedded roadmap, and Intel guarantees availability of those products for at least seven years after launch. Some customers want an architecture that will remain stable for a long time, and OEMs can leverage their relationship with Intel to help these customers choose products that will be available for multiple years. This strengthens relationships across the distribution chain and builds trust in Intel’s solutions, even as Intel continues to push architecture performance to new levels.

The Haswell E5-2600 V3 CPUs are built on the same Intel 22nm tri-gate transistor process as the previous E5-2600 V2 Ivy Bridge processors, so the maturity of the manufacturing process allows new features to be released on a stable platform. Intel makes this possible by adhering to a very predictable schedule that releases the next CPU every 18-24 months. Additionally, in most cases when a new “Tick” process die shrink arrives, the new CPUs can be installed in the same physical platform as the “Tock” microarchitecture that preceded them. The motherboards that take V3 CPUs are expected to accept future-generation “V4” CPUs with little or no platform modification (Figure 3). So now is the time for OEMs to begin working with customers to evaluate the new architecture, which will handle two generations of CPUs and serve as the primary platform architecture for the next 3.5 to 4 years. This is a busy time for OEMs as they continue to support existing Intel platforms while preparing for the soon-to-change microarchitecture.

Example of advanced motherboard with V3 CPU and the ability to readily accept the next generation V4. Courtesy Intel.

In many ways, customers and OEM partners move with Intel through a rapidly shifting paradigm. But Intel’s focus on maintaining productive relationships with every member of its deployment chain ensures that its pace of performance gains remains an asset, not a hindrance, in meeting evolving requirements for greater productivity at lower cost.

UNICOM Engineering

Canton, MA

(781) 332-1000

www.unicomengineering.com